Most AI practitioners will argue that the risk to humanity from AI doesn’t (and won’t) come from an AI waking up one day, deciding that the best way to solve the world’s problems is to wipe out humanity and then serendipitously finding that it’s in control of the world’s nuclear weapons. On the principle that cock-up trumps conspiracy pretty much every time, we’re far more likely to take a range of hits from the misapplication of an AI that’s either too stupid[1] to do the job that’s been asked of it or deployed by people who are incapable of understanding its limitations (or indeed don’t care, as long as they’ve cashed out before it all falls apart). Broadly speaking, machine systems fail for one or more of these reasons:

- Failure of Data Quality: This includes both the limitations of sensors (e.g. IoT devices or vehicle sensors) and the limitations of any data set provided to a machine learning system: in either case, the data available is not adequate for the task, however you process it.

- Failure of Discrimination: This is where the system, for whatever reason, is unable to create a significant and unambiguous outcome, because the data are insufficiently diverse to generate robust results or there is just too much noise to enable it to extract relevant information from the background. As a side note here, one of the greatest achievements of the Event Horizon Telescope project has been the way in which it has built effective systems to discriminate signal from noise and to test that it has done so.

- Failure to Comprehend (Rationalise): This is where humans have it over most machine systems. We can turn a perceived pattern into consistent recognition of a scene in context: we can see a bicycle from pretty much any angle and know that it’s a bicycle, regardless of whether it’s being pushed, ridden by a human, by a fish, or is lying in a pond. Machines aren’t yet terribly good at that. However, lacking sufficient context, both we and machine systems are prone to cognitive red herrings such as pareidolia, the tendency to see patterns where none actually exist – one famous example being the human face ‘seen’ in a Martian mesa.

- Failure to Respond: If a system has appropriate data and training, and has isolated and rationalised that data to a usable degree, it then has to be able to come up with a response that is appropriate, not just to what it’s seeing, but to the context in which it is seeing it. If it’s got a strong match for a given pattern, then it can deal with it. If, however, it has to choose between a range of options of similar probability, we’re down to pot luck or an electronic sulk, where it then needs some external mechanism to help resolve the conflict.

And that last is where AIs tend to ‘hard fail’, that is, they don’t have much in the way of fallback mechanisms to either attempt to understand an exception from general principles or to know that they’ve failed in the first place. In other words, they lack any ‘common sense’ mechanism.
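To make the ‘Failure to Respond’ case a bit more concrete, here is a minimal Python sketch of a system that at least knows when it is stuck. It is purely illustrative: the `decide` function, the `min_margin` threshold and the example labels are my own assumptions, not a description of how any real system works.

```python
from typing import Dict, Optional


def decide(scores: Dict[str, float], min_margin: float = 0.2) -> Optional[str]:
    """Return the best-scoring label, or None if the top two are too close.

    `scores` maps each candidate label to a confidence value.
    `min_margin` is a hypothetical threshold chosen for illustration.
    """
    if not scores:
        return None  # nothing to choose from
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    if len(ranked) == 1:
        return ranked[0][0]
    best_label, best_score = ranked[0]
    _, runner_up_score = ranked[1]
    if best_score - runner_up_score < min_margin:
        return None  # ambiguous: needs an external mechanism to resolve
    return best_label


clear = decide({"bicycle": 0.90, "pond": 0.05})
unsure = decide({"bicycle": 0.45, "motorbike": 0.42})
```

Returning `None` instead of the marginal winner is the point: the caller is forced to handle the ‘I don’t know’ case rather than being handed pot luck dressed up as an answer.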

We can compensate for that to some extent by using multiple algorithms to look at and ‘vote’ on their interpretation of a data set: here we either end up with a common failure mode (they all fail the same way) or divergent outcomes, in which case the system can call for help, be that from another AI, from a ‘dumb’ control system that manages a safe fail or from a human being, who of course may be asleep, drunk or watching TV.
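As a rough sketch of that voting-and-reversion idea, assuming each algorithm is just a function that returns a label: the names `vote`, `classifiers` and `fallback` below are hypothetical, and real safety-critical systems are a great deal more involved than this.

```python
from collections import Counter
from typing import Any, Callable, List, Sequence


def vote(
    classifiers: Sequence[Callable[[Any], str]],
    sample: Any,
    fallback: Callable[[Any, List[str]], str],
) -> str:
    """Accept a label only if every classifier agrees; otherwise call for help.

    `fallback` stands in for whatever takes over on disagreement: another AI,
    a 'dumb' safe-fail controller or a human operator.
    """
    votes = [classify(sample) for classify in classifiers]
    if not votes:
        return fallback(sample, votes)
    label, count = Counter(votes).most_common(1)[0]
    if count == len(votes):
        # Unanimous. Note this does NOT prove the answer is right: a common
        # failure mode produces exactly the same agreement.
        return label
    return fallback(sample, votes)  # divergent outcomes: revert


def safe_fail(sample: Any, votes: List[str]) -> str:
    """Hypothetical 'dumb' controller that always picks the safe option."""
    return "safe_stop"


agreed = vote([lambda s: "go", lambda s: "go"], "frame-1", safe_fail)
split = vote([lambda s: "go", lambda s: "stop"], "frame-2", safe_fail)
```

The design choice worth noticing is that disagreement is detectable and agreement is not: voting catches divergent outcomes, but it is blind to every algorithm being wrong in the same way.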

That sort of reversion is what we find in safety-critical systems such as autonomous vehicles. Very basic machine learning systems that aren’t in safety- or financially-critical environments tend not to have immediate reversion or confirmatory backup, because it simply doesn’t matter all that much, as long as a) the benefits outweigh the fails by a sufficient margin and b) the fails don’t actually kill people.

So now for today’s[2] low-consequence machine learning fail, or at least my best guess as to what’s happened.

If you search for a category of information on Google, it will return a relevant list of matching items, with text, images and links, more or less appropriate to what you asked for. Now these lists aren’t compiled by rooms full of Google wonks, or even third party data processors (both are too expensive for trivial applications) – they’re created by machine learning systems that are trained to associate and categorise data, using data mining, semantic analysis and computer vision to create and present categories of information.

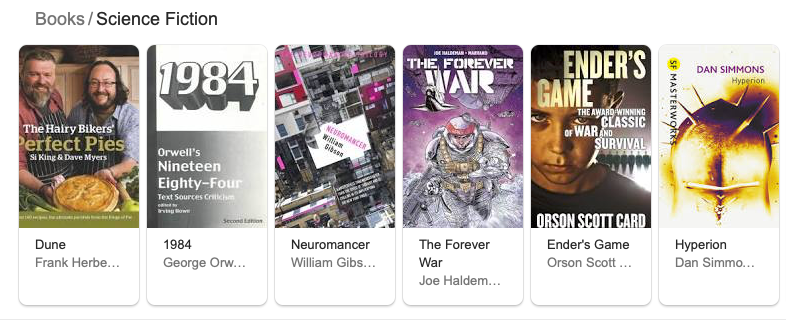

So if you search for “best sci-fi books”, Google will very helpfully return a list of what its systems think are the highest rated sci-fi books of all time. And this is what it gives you:

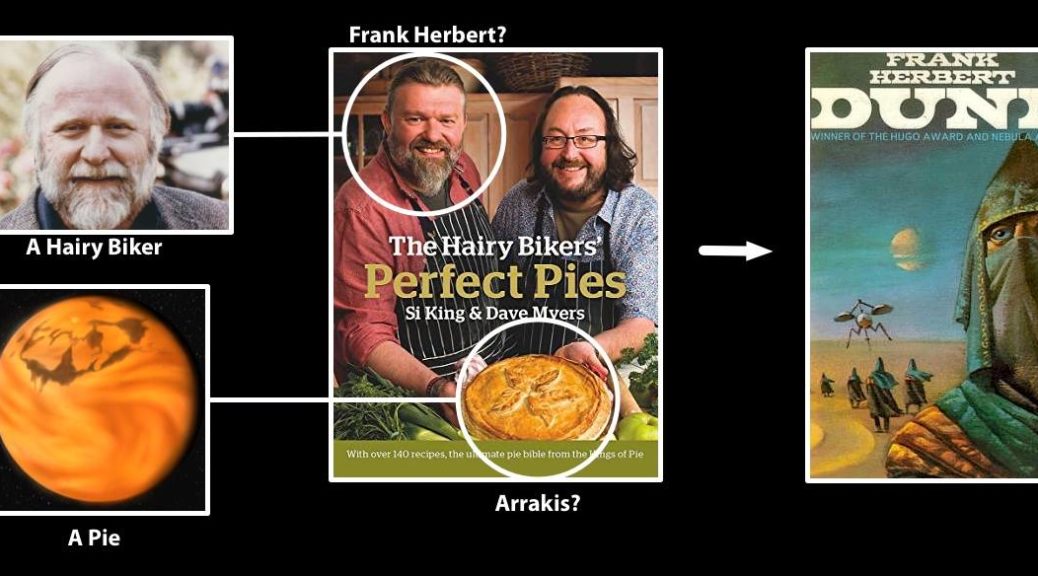

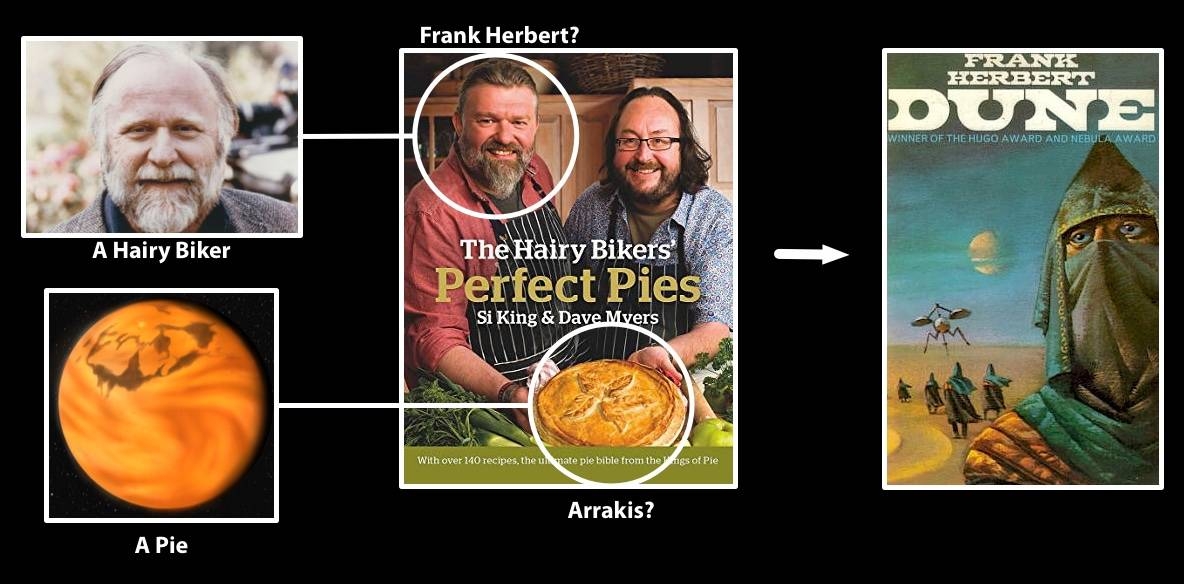

Which looks fine, until you look at the entry for Dune. Wait, what? Just which part of the Hairy Bikers’ Perfect Pies book relates to Frank Herbert’s classic? I was intrigued by this, so had a bit of a dig: the first thing to eliminate was any basic metadata mistake. I couldn’t see anything there, and the ISBNs are sufficiently different that there doesn’t seem to be any possibility of simple mis-keying somewhere along the line. So I looked and thought a bit more, accompanied by more coffee than was perhaps wise. And this is what I’m seeing:

- I don’t see this association of image and title anywhere else, so let’s assume that it’s an artefact of Google’s processes, rather than something it’s inherited from source.

- Both Hairy Bikers are beardie blokes, Si King particularly so.

- Frank Herbert, in his later years, was also a beardie, of similar conformation to Si King.

- Dune (Arrakis) is a desert planet, so is usually depicted in sci-fi art as an orange blob.

- A Hairy Biker’s Perfect Pie is also an orange blob.

- There are a lot of images of covers for any given book out there for an automated system to choose from, so Google’s machine learning system is likely to use the one that scores most highly on multiple factors.

My semi-informed guess then is that there’s an image recognition system in play here, one that’s scoring for fit against multiple matches, in this case for any imagery related to Dune. So we have a single image that combines a beardie bloke who could just be Frank Herbert AND an orange blob that could score highly as an image of Dune. So there’s a double, high-confidence hit for the image as representing Dune and, voilà, we have a pie-based Geordie universe – more Wye aye! than AI.
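If that guess is anywhere near right, the failure can be sketched in a few lines of Python. To be clear, this is a toy under my own assumptions: the `Candidate` fields, the simple additive scoring and every number below are invented for illustration, and none of it is Google’s actual method.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Candidate:
    name: str
    author_likeness: float   # made-up score: does a face look like the author?
    setting_likeness: float  # made-up score: does the image look like Arrakis?


def pick_cover(candidates: Sequence[Candidate]) -> Candidate:
    """Pick the image with the highest combined score across both factors."""
    return max(candidates, key=lambda c: c.author_likeness + c.setting_likeness)


# Illustrative values only: a genuine cover shows the planet but no author,
# while the pie book offers a beardie bloke AND an orange blob.
candidates = [
    Candidate("Dune cover", author_likeness=0.0, setting_likeness=0.8),
    Candidate("Perfect Pies cover", author_likeness=0.6, setting_likeness=0.7),
]
chosen = pick_cover(candidates)
```

With these invented numbers the pie book wins because two middling matches add up to more than one good one, and nothing in the scoring asks whether the image is a cover of the right book in the first place.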

The final irony here is that one of the basic premises of Dune’s universe is that it’s one where, following the Butlerian Jihad, all AIs and robots are banned…

1. The usual disclaimer: I’m using terms such as ‘stupid’ and even ‘artificial intelligence’, whilst entirely accepting that they’re anthropomorphic terms that aren’t really relevant to machine systems. They are however useful analogues for non-technical descriptions.

2. With thanks to Sam and Robbie Stamp for passing this to me.