I spend quite a lot of my time doing due diligence on innovation funding applications. I’ve been doing this for rather longer than is comfortable to contemplate so, over the years, I’ve seen successive tides of hype wash in, fill a few rock pools and then wash out again, only for the idea to re-emerge a few years later – assuming it had any merit in the first place – in a form that actually works as part of the overall problem-solving ecosystem. That innovation-development-hype-disappointment cycle may repeat several times before the other innovations an idea depends on catch up and let it gain market traction. That’s certainly been the case with VR and AR, with IoT and, most of all, with Artificial Intelligence (AI).

This time, setting aside Larry Tesler’s invocation of Zeno’s paradox, in which AI is regarded as “anything we haven’t done yet”, we have reached that confluence of developments, computational capability and infrastructure that is finally letting Machine Learning (ML) make real headway in multiple market sectors. It is enabling systems to deal with progressively more non-deterministic challenges, starting with specific problem domains and slowly becoming more generalised.

But is that really ‘AI’? No. Like many others, I regard AI as a specific class of Machine Learning, one where the system is capable of self-adapting to handle unresolved inputs, or at least has enough of a functional ecosystem around it to plausibly attempt to do so. Everything else is a trained Machine Learning system that operates within a constrained set of conditions, whether it’s supervised or unsupervised. That isn’t to deny the occasionally gob-smacking capability of machine learning/deep learning systems. A system that has taught itself to play Chess or Go to world champion level is a stunning achievement, but it isn’t something I’d regard as an AI: such systems are capable of generating new behaviours within a constrained problem domain, but the bounding rule set has been pre-defined for them, and the way those rules are represented has been modelled, tweaked and weighted interminably during the development process (although I’ll allow that, when we start to use one ML system to train another, we’re going into a whole new, and very interesting, place).

So when I read a pitch that tells me that the problem being addressed will be solved by the application of “AI”, I sigh, and start looking for any information that qualifies that statement with enough detail to demonstrate that the pitcher, firstly, actually knows what they’re talking about, and, secondly, that ML (or even AI) is appropriate to the challenge they’re addressing. I will grudgingly allow them the use of ‘Artificial Intelligence’ rather than ‘Machine Learning’ as I accept that not everyone is as much of a revolting pedant as me. But they do need to show both capability and relevance, otherwise there’s a trash can waiting for their application to join its many peers.

Setting aside the actual knowledge possessed by the team (and that’s pretty easy to establish, and worth doing, as too often the pitch is written by the marketeer rather than the tech authority), the big problem comes when AI/ML is proposed not as part of the solution to a particular class of problem, or to address a particular part of a bigger problem, but as a lazy catch-all substitute for actually analysing the problem.

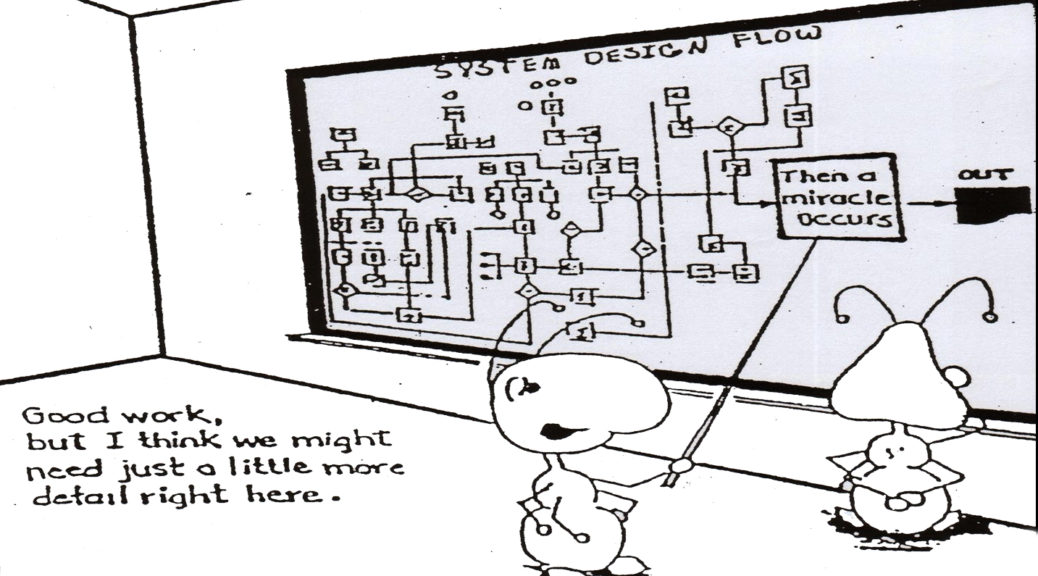

Here, I’m reminded every time of the very first lecture I ever attended in Systems Analysis, given by the sadly late, great Prof. Colin Tully. His opening play was to put up an OHP (yes, it was that long ago) slide of this utterly famous cartoon of a couple of ants (for some reason, it’s nearly always ants – why is it always ants?) staring at a flow chart in which the final process box before output is simply titled “Then a Miracle Occurs”. And that’s where far too many projects and start-ups pitching AI are right now: throwing the mot du jour around, and either not bothering, or being unable, to decompose the problem sufficiently to show where, why and how ML can be of unique benefit. They need to go through that process: bunging in a few technobuzzwords without doing so is simply cringeworthy – e.g. “We’ll use TensorFlow”. Fine, you can spell ‘TensorFlow’. Now show me that you understand why you should be using TensorFlow – make the argument, please.

Those of you who actually know what you’re talking about, have no fear: as long as you clearly express the problem, explain why it is best addressed with an ML-based approach and then describe which canon of the broad church of ML you feel is most appropriate, you will stand out like a shining beacon in a rippling sea of bovine ordure.

Just don’t be that ant.