Having had a good whinge about the problems with how we interact with content and with the services that deliver it, I’ll now try turning the argument around to ask: “What else could our media experiences be like?”

Firstly, and given the dead ends the content industry has driven itself into, it’s worth reminding ourselves of the blindingly obvious: the internet is very good at getting specific information to individuals, on demand, and then responding to their use of and activity around that information. It gets progressively less good as lots of people request the same information, or lots of information, at the same time. We are manifestly at the stage where the internet’s infrastructure often can’t cope consistently with high-demand content and, to get anywhere with it, ever greater investment is needed in the networks, routing and peering infrastructures that get content A to users B…Z * a gazillion. And it’s a sad truism of commercial reality that investment almost invariably lags demand. Broadcast, on the other hand, is a great way of getting the same content to as many people as you like within the footprint of a particular broadcast system, but of course it doesn’t directly know a damn thing about how that content is being used. Well, d’uh – but do hold those basics in mind…

The silos that currently inhibit that elusive customer experience, although probably inevitable, aren’t actually necessary: it didn’t have to be like this and still doesn’t. Other industries have spent huge amounts of time and effort breaking down functional and knowledge silos, within companies and across entire sectors. Isn’t it time the content industries did the same?

So let’s step back a while and see where we could have gone – and indeed could still go – from a time when first principles still applied. Back in 1998, in the Eocene of the Internet, broadband was just emerging, blinking and newborn, from the labs into the public sphere, and most of us were still on 33K dial-up (ask your parents, kids) or, if well flush, ISDN. It was then that Intel went out and bought a TV/datacast satellite. They had, quite reasonably, foreseen that the future lay in the convergence of content and networks, and thought it was a game they wanted to be in. The trouble was that, having bought it, they weren’t quite sure what to do with it. Which is where we came in: Douglas Adams, Richard Creasey and I spent a summer with Intel brainstorming the existence, and then the future, of interactive TV, probably just around the time the term was being coined. There’s stuff we did then that still doesn’t exist, and other parts that I’ve helped create since, but there’s been relatively little that, at however coarse a level, we didn’t foresee.

One fundamental that I proposed very early on, and which we all thought such a no-brainer that discussing it took all of thirty seconds, was that broadcast and internet delivery would complement each other and end up integrated as a seamless whole, in the network and at the end points. I called that the 3D Internet. And there I was about as wrong as I could have been. Our model did, however, include unified media distribution (from what we’d now call playheads) and edge caching, either on-premises or at ISP level. The latter is exactly what Velocix built successfully a good few years later.

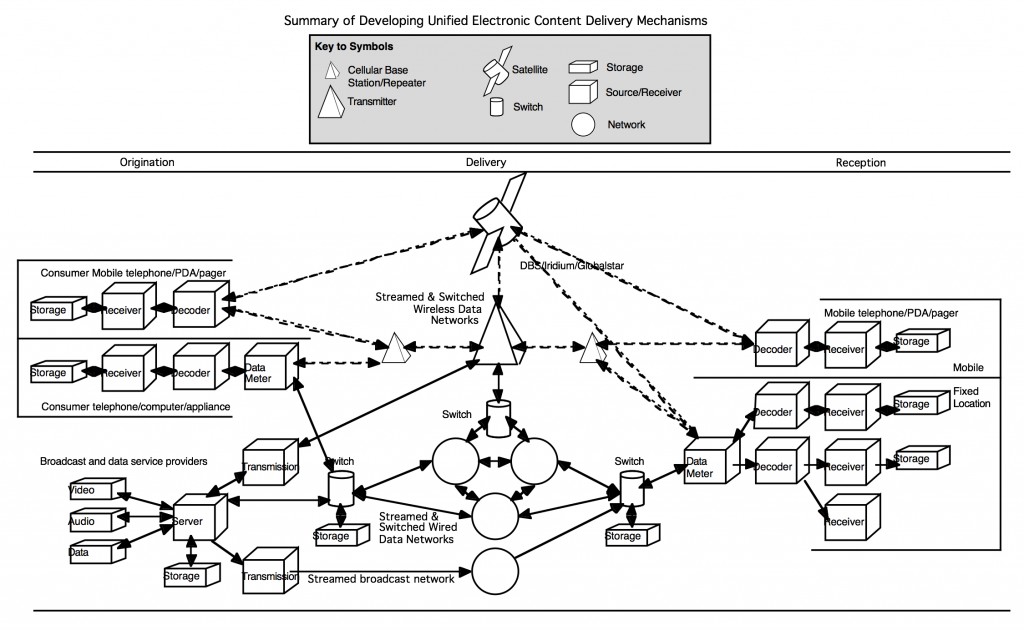

In fact, here’s my summary diagram from the time, entirely unretouched – it shows, within the constraints of our knowledge, how we thought the world of convergence would develop. Some of it has indeed happened; other parts have been overtaken by developments since but there are still areas where we have to ask, “Why didn’t that happen?”.

We understood that Moore’s Law and economies of scale would apply to routing, switching and premises equipment. We understood that fibre, network peering and transit would get ever cheaper. We also assumed that, as demand hit the exponential phase of the classic facilitation/growth/saturation curve, it would regularly and repeatedly outstrip supply. At the same time, it was obvious to us that the growing digital broadcast infrastructure would lead to common content formats and production workflows, and therefore to the ability to mix’n’match the segmentation, tailoring and delivery of experience over whichever networks, and to whichever devices, were most appropriate at any given time. So why would you go to the expense of sizing your IP networks for full-demand unicast video delivery when broadcast plus unified edge caching was part of the delivery equation?
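To put rough numbers on that sizing question, here is a back-of-envelope sketch in Python. It is not the model we built at the time; it simply assumes a single popular programme, a hypothetical audience of a million simultaneous viewers at 5 Mbit/s, and 500 edge caches each filled with one copy over IP. The figures and function names are illustrative only.

```python
"""Back-of-envelope comparison of delivery models for one popular programme.

All figures are hypothetical, chosen only to illustrate how load scales
with audience size under each model.
"""
from dataclasses import dataclass


@dataclass
class Scenario:
    viewers: int          # simultaneous viewers of the same programme (hypothetical)
    bitrate_mbps: float   # bitrate of a single stream, in Mbit/s (hypothetical)
    edge_caches: int      # number of ISP or on-premises caches (hypothetical)


def unicast_core_load_mbps(s: Scenario) -> float:
    """Every viewer pulls a separate stream across the core network."""
    return s.viewers * s.bitrate_mbps


def broadcast_load_mbps(s: Scenario) -> float:
    """Load on the broadcast channel is one stream, independent of audience size."""
    return s.bitrate_mbps


def edge_cached_core_load_mbps(s: Scenario) -> float:
    """Core carries one copy per edge cache; viewers are then served locally.

    Assumes each cache is filled exactly once over IP and that every viewer
    is served from a warm cache near them.
    """
    return s.edge_caches * s.bitrate_mbps


if __name__ == "__main__":
    s = Scenario(viewers=1_000_000, bitrate_mbps=5.0, edge_caches=500)
    print(f"Unicast core load:     {unicast_core_load_mbps(s):,.0f} Mbit/s")
    print(f"Broadcast load:        {broadcast_load_mbps(s):,.0f} Mbit/s")
    print(f"Edge-cached core load: {edge_cached_core_load_mbps(s):,.0f} Mbit/s")
```

With these made-up figures, unicast needs 5,000,000 Mbit/s (5 Tbit/s) across the core, broadcast needs one 5 Mbit/s stream whatever the audience, and filling 500 caches needs 2,500 Mbit/s. The point is the scaling, not the specific numbers: only the unicast term grows with the audience.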

What we entirely failed to understand, of course, despite our industry experience, wasn’t the technology but how entrenched attitudes were, with TV hanging on for grim death to its own rather parochial world view and internet companies even then doing a fine job of creating a new parochialism in very short order: it truly is astonishing how really, really young industries can develop a received-wisdom mindset from nowhere and, near-as-dammit, overnight.

Looking at the ‘missing in action’ elements of an effective experience, I think it reasonably obvious that – ultimately – the big winners in convergent delivery will be the service companies that crack the interactive, multi-source, multi-screen entertainment market by focussing on a complete, coherent, end-to-end customer experience. This needs to be one that encompasses content accessibility by all means, presentation of and interaction around the content and its metacontent, overall service usability and ease of transaction. That’s something that can be started at the device ecosystem level but, ultimately, it does need that fully integrated, user-invisible and flexible infrastructure to intelligently manage broadcast and IP delivery and to integrate local and remote storage.

So it’s not (just) about building feature-laden boxes or walled gardens full of apps on ‘Smart’ TVs: it’s about considering the whole model from the point of view of the poor bloody consumer and building, both competitively and collaboratively, experience commonality and a seamless experience ‘segue’ into their offerings. To get there, however, we need to revisit the market logjam from the first part of this story and engender two major market disruptions:

Attitude: broadcasters, net corporations, content service providers and device manufacturers need to raise their eyes and look at how their services and devices can co-exist in just such a broad and accessible ecosystem of experience. This isn’t a case of giving up competitive advantage; they just have to forget that pre-Adam Smith mercantilist axiom, “For me to succeed, my competition must fail”, and consider that a little open commonality and framework building will grow the market overall, enable whole new classes of service and just make everyone’s life a tad easier. Easy to say, much harder to make happen.

Content Accessibility: content owners also need to come out of their “All the revenue, all the time” bunkers and start to legitimise human behaviour – how people actually want to use and share content. It’s not about condoning piracy but about addressing the real use cases for content, something they’ve consistently failed to do since the rise of the cassette recorder in the early 1970s. In doing so they may even (I believe) make more money and create a sustainable content market. Given current attitudes, this is probably the less likely of the two to happen, but it only takes one major player to flip and the whole market will, perforce, have to follow.

If those two logs can be shifted, then I believe everything else will follow. It won’t happen in time to save my Breaking Bad experience, but it might just happen before I retire or before there’s a popular uprising against everything that looks pretty, goes ping a lot and entirely fails to work. It needs to.
